January 18, 2021

Databricks Community Edition: A Beginner’s Guide - Part 2

Duration: 15 min


Welcome back!

In the previous blog, we discussed Databricks Community Edition and ‘The Data Science Workspace’ at length. To recap what Databricks is:

“It is a unified data platform to accelerate innovation across Data Science, Data Engineering and Business Analytics. It leverages Apache Spark for computational capabilities and supports several programming languages such as Python, R, Scala and SQL for code formulation. Databricks is one of the fastest growing data services on AWS and Azure with 5000+ customers and 450+ partners across the globe.”

Let’s explore the platform architecture.

A Peep into the Unified Analytics Platform

Databricks is a single cloud platform for massive-scale data engineering and collaborative data science. The three major constituents of the Databricks platform are:

1. The Data Science Workspace

2. Unified Data Services

3. Enterprise Cloud Services

We will now explain Unified Data Services in detail.

Databricks Unified Data Services provides a reliable and scalable platform for data pipelines, data lakes, and data platforms. In short, it helps you manage your complete data journey, from ingesting data through processing and storing it to exporting it as required, by facilitating the following:

1. Data Ingest: Unified Data Services (UDS) leverages a library of connectors, integrations, and APIs to pull data from different data sources, data stores, and data types, including batch and streaming data.

2. Data Pipelines: UDS supports scalable and reliable data pipelines and lets you use Scala, Python, R, or SQL to run processing jobs quickly on distributed Spark runtimes, without having to worry about the underlying compute.

3. Data Lakes: UDS helps you build reliable data lakes at scale. It improves data quality, optimizes storage performance, and manages stored data, all while maintaining data lake security and compliance.

4. Data Consumers: UDS makes it easy to use your data lake as a shared source of truth across Data Science, Machine Learning, and Business Analytics teams, serving BI dashboards, production models, and everything in between.
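The following plain-Python sketch makes the four stages concrete by moving one batch through ingestion, processing, storage, and consumption. It is a toy model and is not specific to Databricks. The CSV payload, the function names `ingest`, `process`, `store`, and `consume`, and the dictionary standing in for a data lake are all hypothetical. On Databricks, each stage would typically be a Spark job running on a cluster.

```python
import csv
import io
from collections import defaultdict

# Hypothetical raw batch, standing in for a file pulled from a source system.
RAW_CSV = """order_id,region,amount
1,EU,120.50
2,US,80.00
3,EU,
4,US,42.25
"""


def ingest(text):
    """Ingest: parse raw CSV text into a list of row dictionaries."""
    return list(csv.DictReader(io.StringIO(text)))


def process(rows):
    """Process: drop rows with a missing amount and cast amounts to float."""
    return [{**row, "amount": float(row["amount"])} for row in rows if row["amount"]]


def store(lake, table, rows):
    """Store: append processed rows to a named table in an in-memory 'lake'."""
    lake.setdefault(table, []).extend(rows)


def consume(lake, table):
    """Consume: a BI-style aggregate, the total amount per region."""
    totals = defaultdict(float)
    for row in lake[table]:
        totals[row["region"]] += row["amount"]
    return dict(totals)


lake = {}
store(lake, "orders", process(ingest(RAW_CSV)))
print(consume(lake, "orders"))  # {'EU': 120.5, 'US': 122.25}
```

The incomplete row (order 3) is filtered out during processing, so only clean data reaches the consumer.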

Some profile-specific benefits offered by UDS are as follows:

Interesting, right?

Read more about Unified Data Services in Introduction to Unified Data Service.

Unified Data Services is further divided into three components that support its efficient functioning:

1. Delta Lake:

Enterprises have been spending heavily on getting data into data lakes with Apache Spark, aiming to apply Data Science and ML to that data. However, the data in these lakes is often not ready for data science and ML, and as a result most of these projects fail because of unreliable data and poor performance.

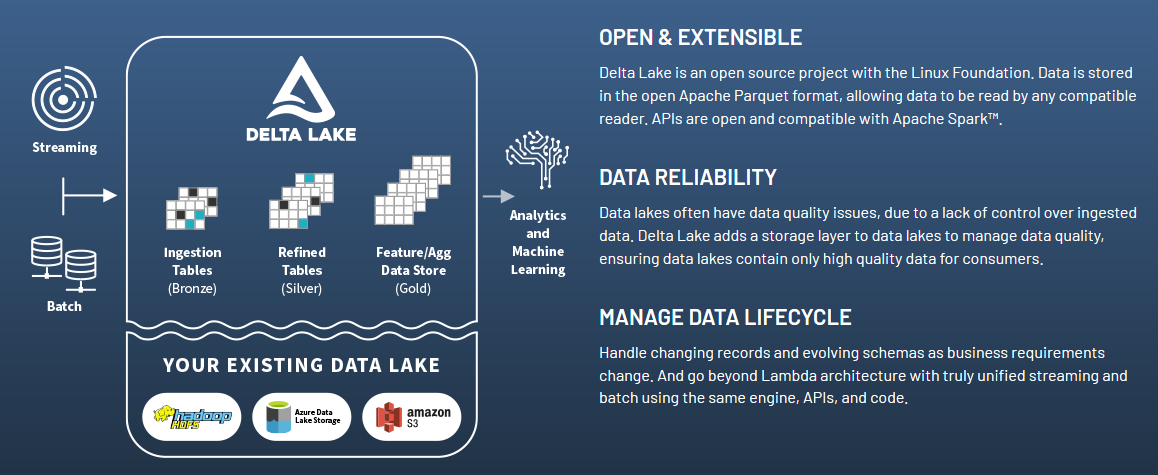

To address this issue, Databricks created Delta Lake, a new standard for building data lakes that makes data ready for analytics.

Delta Lake is an open source storage layer that brings reliability to data lakes. It helps you build robust data lakes and is a vital component of a cloud data platform. Delta Lake provides ACID transactions and scalable metadata handling, and it unifies streaming and batch data processing. It runs on top of your existing data lake and is fully compatible with Apache Spark APIs. Delta Lake on Azure Databricks lets you configure Delta Lake based on your workload patterns, and Azure Databricks also includes Delta Engine, which provides optimized layouts and indexes for fast interactive queries. The key features of Delta Lake are as follows:

ACID transactions on Spark: Data lakes typically have multiple data pipelines reading and writing data concurrently, and because data lakes lack transactions, data engineers must go through a tedious process to ensure data integrity. Delta Lake brings ACID transactions to data lakes and provides serializability, the strongest isolation level.
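Delta Lake builds these transactions on an ordered transaction log where each commit is a numbered file. Concurrent writers use optimistic concurrency: the first writer to create the next version file wins, and the other writer must re-check and retry. The sketch below models only that core idea on a local disk. It assumes that Python's exclusive-create mode (`open(..., "x")`) behaves as an atomic "put if absent". The `ToyTransactionLog` class and its file layout are hypothetical and are not the Delta Lake log format.

```python
import json
import os
import tempfile


class ToyTransactionLog:
    """A toy append-only commit log: one JSON file per table version (hypothetical layout)."""

    def __init__(self, directory):
        self.directory = directory

    def _path(self, version):
        # Zero-padded names sort in version order.
        return os.path.join(self.directory, f"{version:020d}.json")

    def latest_version(self):
        """Return the newest committed version, or -1 for an empty table."""
        versions = [int(name.split(".")[0])
                    for name in os.listdir(self.directory) if name.endswith(".json")]
        return max(versions, default=-1)

    def try_commit(self, read_version, actions):
        """Try to write version read_version + 1; return False if another writer got there first."""
        try:
            # Mode "x" creates the file only if it does not already exist,
            # which acts as an atomic put-if-absent on a local filesystem.
            with open(self._path(read_version + 1), "x") as f:
                json.dump(actions, f)
            return True
        except FileExistsError:
            return False

    def commit_with_retry(self, actions, max_attempts=5):
        """Re-read the latest version and retry after losing a race (blind appends only)."""
        for _ in range(max_attempts):
            read_version = self.latest_version()
            if self.try_commit(read_version, actions):
                return read_version + 1
        raise RuntimeError("gave up after repeated conflicts")


with tempfile.TemporaryDirectory() as log_dir:
    log = ToyTransactionLog(log_dir)
    # Two writers both read the empty table (version -1) and race for version 0.
    first = log.try_commit(-1, [{"add": "part-0.parquet"}])
    second = log.try_commit(-1, [{"add": "part-1.parquet"}])
    # The losing writer re-reads the log and commits as version 1 instead.
    retried = log.commit_with_retry([{"add": "part-1.parquet"}])

print(first, second, retried)  # True False 1
```

Before retrying, a real writer would also check whether the winning commit touched data it had read. This toy skips that check because it only handles blind appends, which can never conflict logically.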

Scalable metadata handling: In big data, even the metadata itself can be “big data”. Delta Lake treats metadata just like data, leveraging Spark’s distributed processing power to handle all its metadata. As a result, Delta Lake can handle petabyte-scale tables with billions of partitions and files with ease.

Streaming and batch unification: A table in Delta Lake is a batch table as well as a streaming source and sink. Streaming data ingest, batch historic backfill, and interactive queries all just work out of the box.

Schema enforcement: Delta Lake provides the ability to specify your schema and enforce it. This helps ensure that the data types are correct and required columns are present, preventing bad data from causing data corruption.
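The sketch below illustrates the idea, not Delta Lake's implementation. It validates an entire batch against a declared schema before writing anything, so one bad row rejects the whole write and leaves the table untouched. The `ToyTable` and `SchemaMismatchError` names are hypothetical. The rule that every column must be present with exactly the declared Python type is a simplifying assumption.

```python
class SchemaMismatchError(ValueError):
    """Raised when a batch does not match the table's declared schema."""


class ToyTable:
    """An in-memory table that enforces a fixed schema on every write."""

    def __init__(self, schema):
        self.schema = schema  # column name -> expected Python type
        self.rows = []

    def append(self, batch):
        # Validate every row before writing anything: one bad row rejects the whole batch.
        for row in batch:
            extra = set(row) - set(self.schema)
            missing = set(self.schema) - set(row)
            if extra or missing:
                raise SchemaMismatchError(
                    f"extra columns {sorted(extra)}, missing columns {sorted(missing)}"
                )
            for column, expected in self.schema.items():
                if not isinstance(row[column], expected):
                    raise SchemaMismatchError(
                        f"{column!r}: expected {expected.__name__}, got {type(row[column]).__name__}"
                    )
        self.rows.extend(batch)


table = ToyTable({"id": int, "name": str})
table.append([{"id": 1, "name": "alice"}])
try:
    # The second row stores the id as a string, so the whole batch is rejected.
    table.append([{"id": 2, "name": "bob"}, {"id": "3", "name": "carol"}])
except SchemaMismatchError as err:
    print("rejected:", err)  # rejected: 'id': expected int, got str
print(len(table.rows))  # 1
```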

Time travel: Delta Lake provides snapshots of data, enabling developers to access and revert to earlier versions of data for audits, rollbacks, or to reproduce experiments. Data versioning enables full historical audit trails and reproducible machine learning experiments.
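In Delta Lake itself, you read an earlier version with the `versionAsOf` reader option, or with `VERSION AS OF` in SQL. The plain-Python sketch below does not use Spark. It only models the underlying idea: each commit records what was added and removed, so the table as of any version can be rebuilt by replaying the log up to that point. `ToyVersionedTable` and its `restore` method are hypothetical names.

```python
class ToyVersionedTable:
    """Every commit records the rows it added and removed; any version can be rebuilt by replay."""

    def __init__(self):
        self.log = []  # self.log[v] holds (added, removed) for version v

    def commit(self, added=(), removed=()):
        self.log.append((frozenset(added), frozenset(removed)))
        return len(self.log) - 1  # the new version number

    def as_of(self, version):
        """Replay commits 0..version to reconstruct the table at that version."""
        if not 0 <= version < len(self.log):
            raise ValueError(f"no such version: {version}")
        state = set()
        for added, removed in self.log[: version + 1]:
            state -= removed
            state |= added
        return state

    def restore(self, version):
        """Roll back by committing a new version whose contents equal an old one."""
        current = self.as_of(len(self.log) - 1)
        target = self.as_of(version)
        return self.commit(added=target - current, removed=current - target)


t = ToyVersionedTable()
t.commit(added={("alice", 30), ("bob", 25)})           # version 0
t.commit(added={("bob", 26)}, removed={("bob", 25)})  # version 1: update bob
t.commit(removed={("alice", 30)})                     # version 2: delete alice
print(sorted(t.as_of(0)))  # [('alice', 30), ('bob', 25)]
print(sorted(t.as_of(2)))  # [('bob', 26)]
restored = t.restore(0)    # version 3, with the same contents as version 0
```

The rollback adds a new commit instead of deleting later ones, which keeps the full history available for audits.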

Upserts and deletes: Delta Lake supports merge, update, and delete operations on datasets through its Scala and Java APIs. This makes it easier to comply with GDPR and CCPA, and it enables complex use cases such as change data capture (CDC), slowly changing dimension (SCD) operations, and streaming upserts.
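Delta Lake exposes this operation as `MERGE INTO` in SQL and as a merge builder in its APIs. The sketch below shows only the semantics, in plain Python. Each change record is matched on a key: matched rows are updated or deleted, and unmatched rows are inserted. The `merge` function and the `op` field used as a change-data-capture marker are hypothetical.

```python
def merge(target, changes, key="id"):
    """Apply change records to target rows matched on `key`; returns a new list.

    Each change carries a hypothetical "op" field: "upsert" or "delete".
    """
    by_key = {row[key]: dict(row) for row in target}  # copy so `target` is untouched
    for change in changes:
        record = {k: v for k, v in change.items() if k != "op"}
        if change["op"] == "delete":
            by_key.pop(record[key], None)  # matched: delete; not matched: no-op
        elif change["op"] == "upsert":
            # Matched: update the listed columns; not matched: insert a new row.
            by_key[record[key]] = {**by_key.get(record[key], {}), **record}
        else:
            raise ValueError(f"unknown op: {change['op']!r}")
    return list(by_key.values())


customers = [
    {"id": 1, "email": "a@old.example.com"},
    {"id": 2, "email": "b@example.com"},
]
changes = [
    {"op": "upsert", "id": 1, "email": "a@new.example.com"},  # matched: update
    {"op": "upsert", "id": 3, "email": "c@example.com"},      # not matched: insert
    {"op": "delete", "id": 2},                                # e.g. an erasure request
]
print(merge(customers, changes))
# [{'id': 1, 'email': 'a@new.example.com'}, {'id': 3, 'email': 'c@example.com'}]
```

This sketch applies changes in order. Delta Lake's merge instead raises an error if several source rows match the same target row, so CDC feeds are usually first deduplicated to the latest change per key.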

100% compatibility with Apache Spark APIs: Developers can use Delta Lake with their existing data pipelines with minimal change, as it is fully compatible with Spark, the most widely used big data processing engine.

Together, the features of Delta Lake improve both the manageability and performance of working with data in cloud storage objects, and enable a “lakehouse” paradigm that combines the key features of data warehouses and data lakes: standard DBMS management functions usable against low-cost object stores.

Delta Engine optimizations make Delta Lake operations highly performant, supporting workloads ranging from large-scale ETL processing to ad-hoc, interactive queries. Many of these optimizations take place automatically, so you get the benefits of Delta Engine simply by using Databricks for your data lakes. You can read more about Delta Engine in Introduction to Delta Engine.

Figure 1: Delta Lake Architecture with benefits.

2. Databricks Runtime:

The Databricks Runtime is a data processing engine built on a highly optimized version of Apache Spark, which Databricks says delivers up to 50x performance gains. It runs on auto-scaling infrastructure for easy self-service without DevOps, while also providing the security and administrative controls needed for production. It also helps you build pipelines, schedule jobs, and train models faster. Its rapid adoption is due to the following key benefits:

Performance: The Databricks Runtime has been highly optimized by the original creators of Apache Spark. The significant increase in performance enables new use cases not previously possible for data processing and pipelines and improves data team productivity.

Cost Effective: The runtime leverages auto-scaling compute and storage to manage infrastructure costs. Clusters intelligently start and terminate, and the high cost-to-performance efficiency reduces infrastructure spend.

Simplicity: Databricks has wrapped Spark with a suite of integrated services for automation and management to make it easier for data teams to build and manage pipelines, while giving IT teams administrative control.

Key features of the Databricks Runtime include:

Caching: Copies of remote files are cached in local storage using a fast intermediate data format, which speeds up successive reads of the same data.
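The mechanism works as a read-through cache. The first read of a file goes to remote storage and keeps a local copy, and later reads of the same file are served locally. The sketch below assumes a least-recently-used eviction policy and stores raw bytes. It does not model the Databricks cache's intermediate format or its actual eviction rules. `ReadThroughCache` and the `s3://` paths are hypothetical.

```python
from collections import OrderedDict


class ReadThroughCache:
    """Serve repeated reads locally; fetch from remote storage only on a miss."""

    def __init__(self, fetch_remote, capacity=2):
        self.fetch_remote = fetch_remote  # callable: path -> bytes (the slow remote read)
        self.capacity = capacity          # max number of files kept locally
        self.local = OrderedDict()        # path -> bytes, least recently used first
        self.remote_reads = 0

    def read(self, path):
        if path in self.local:
            self.local.move_to_end(path)  # mark as most recently used
            return self.local[path]
        data = self.fetch_remote(path)
        self.remote_reads += 1
        self.local[path] = data
        if len(self.local) > self.capacity:
            self.local.popitem(last=False)  # evict the least recently used file
        return data


# Hypothetical remote objects; a dict lookup stands in for a network read.
remote_store = {
    "s3://bucket/a.parquet": b"A",
    "s3://bucket/b.parquet": b"B",
    "s3://bucket/c.parquet": b"C",
}
cache = ReadThroughCache(remote_store.__getitem__)
for _ in range(3):
    cache.read("s3://bucket/a.parquet")
print(cache.remote_reads)  # 1: only the first read went to remote storage
```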

Elastic On-Demand Clusters: Build on-demand clusters in minutes with a few clicks and scale them up or down based on your current needs. Users can also reconfigure or reuse resources as data teams or services change.

Backward Compatibility with Automatic Upgrades: Users can choose the Spark version that suits their requirements, so legacy jobs can continue to run on previous versions while new work gets the latest version of Spark hassle-free.

Flexible Scheduler: Customers can run production pipeline jobs on a specified schedule, from minute to monthly intervals, in different time zones, with support for cron syntax and relaunch policies.
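The following small matcher shows what a cron expression encodes, using the classic five-field Unix form (minute, hour, day of month, month, day of week). It is a simplified sketch. Schedulers, Databricks included, may accept richer variants; Quartz-style expressions, for example, add a seconds field. This version also ANDs the day-of-month and day-of-week fields, whereas classic cron ORs them when both are restricted. The `parse_field` and `matches` names are hypothetical.

```python
from datetime import datetime

# Classic five-field cron: minute, hour, day of month, month, day of week (0 = Sunday).
FIELD_RANGES = [(0, 59), (0, 23), (1, 31), (1, 12), (0, 6)]


def parse_field(spec, low, high):
    """Expand one field such as "*", "5", "1-5", "*/15", "10-20/5" or "0,30" into a set."""
    values = set()
    for part in spec.split(","):
        range_part, _, step = part.partition("/")
        if range_part == "*":
            start, end = low, high
        elif "-" in range_part:
            start, end = (int(n) for n in range_part.split("-"))
        else:
            start = end = int(range_part)
        values.update(range(start, end + 1, int(step) if step else 1))
    return values


def matches(expression, when):
    """Return True if the datetime `when` falls on the schedule.

    Simplification: all fields are ANDed; classic cron ORs day-of-month and
    day-of-week when both are restricted.
    """
    specs = expression.split()
    if len(specs) != 5:
        raise ValueError("expected five fields")
    allowed = [parse_field(s, lo, hi) for s, (lo, hi) in zip(specs, FIELD_RANGES)]
    # Python's weekday() counts Monday as 0; cron counts Sunday as 0.
    cron_weekday = (when.weekday() + 1) % 7
    actual = [when.minute, when.hour, when.day, when.month, cron_weekday]
    return all(value in field for value, field in zip(actual, allowed))


# Every 15 minutes from 09:00 to 17:45, Monday to Friday.
expr = "*/15 9-17 * * 1-5"
print(matches(expr, datetime(2021, 1, 18, 9, 30)))  # True: a Monday
print(matches(expr, datetime(2021, 1, 17, 9, 30)))  # False: a Sunday
```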

Notifications: Users are notified when a production job starts, fails, or completes, with no human intervention, through email or third-party production pager integrations, for a hassle-free work experience.

Curious about how the backend works?

The Databricks Runtime implements the open Apache Spark APIs with a highly optimized execution engine that provides significant performance gains compared to the standard open source Apache Spark found on other cloud Spark platforms. This core engine is then wrapped with additional services for developer productivity and enterprise governance. The image below depicts this architecture.

3. BI Reporting on Delta Lake:

BI Reporting on Delta Lake helps you apply business analytics to your data lake. Users can connect directly to recent data in their data lake with Delta Lake and Spark SQL, and use their preferred BI visualization and reporting tools for regular and detailed business insights.

Databricks builds on technologies such as Apache Spark, Delta Lake, TensorFlow, MLflow, Redash and R. In this blog, we will walk you through Apache Spark.

What is Apache Spark?

Apache Spark is a unified analytics engine for large-scale data processing. It builds on the MapReduce model popularized by Hadoop and extends it to efficiently support more types of computation, including interactive queries and stream processing. It is a high-speed cluster computing technology designed for fast computation, and its principal feature is in-memory cluster computing, which increases the processing speed of an application.
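The MapReduce model that Spark generalizes has three steps. Map turns each record into key-value pairs, shuffle groups the pairs so equal keys end up together, and reduce combines each group. The plain-Python word count below runs all three steps in one process purely to show the data flow; it is not Spark code. In PySpark the same job is usually written as a chain of `flatMap`, `map`, and `reduceByKey` on an RDD. Spark can keep the intermediate results in memory instead of writing them to disk between jobs as Hadoop MapReduce does.

```python
from collections import defaultdict
from itertools import chain


def map_phase(line):
    """Map: emit a (word, 1) pair for every word in one input record."""
    return [(word.lower(), 1) for word in line.split()]


def shuffle(pairs):
    """Shuffle: group emitted values by key so each key's values end up together."""
    groups = defaultdict(list)
    for key, value in pairs:
        groups[key].append(value)
    return groups


def reduce_phase(groups):
    """Reduce: combine each key's values into one result."""
    return {key: sum(values) for key, values in groups.items()}


lines = ["Spark extends MapReduce", "Spark keeps data in memory"]
pairs = chain.from_iterable(map_phase(line) for line in lines)
counts = reduce_phase(shuffle(pairs))
print(counts["spark"])  # 2
```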

Spark is designed to support a broad spectrum of workloads, such as batch applications, streaming, interactive queries and iterative algorithms. It also reduces the management burden of maintaining separate tools. Some of its features are as follows:

Speed: Spark can run applications in a Hadoop cluster up to 100 times faster in memory and up to 10 times faster on disk than Hadoop MapReduce, largely by reducing the number of read/write operations to disk. It achieves high performance for both batch and streaming data using a state-of-the-art DAG scheduler, a query optimizer, and a physical execution engine.
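Lazy evaluation is part of what lets Spark's scheduler optimize a job. Transformations such as `map` and `filter` only record steps in a plan, and nothing runs until an action such as `collect` is called. At that point consecutive steps can be pipelined over each record without materializing intermediate results. The `LazyDataset` class below is a hypothetical toy that models only a linear chain of such steps. Spark's real DAG also splits jobs into stages at shuffle boundaries, which this sketch omits.

```python
class LazyDataset:
    """Transformations record a step in a plan; only an action (collect) runs it."""

    def __init__(self, source, steps=()):
        self.source = source
        self.steps = tuple(steps)  # the recorded plan: a linear chain of (kind, fn)

    def map(self, fn):
        return LazyDataset(self.source, self.steps + (("map", fn),))

    def filter(self, predicate):
        return LazyDataset(self.source, self.steps + (("filter", predicate),))

    def collect(self):
        # Each element flows through the whole chain in one pass (pipelining),
        # so no intermediate list is built between steps.
        results = []
        for item in self.source:
            keep = True
            for kind, fn in self.steps:
                if kind == "map":
                    item = fn(item)
                elif not fn(item):
                    keep = False
                    break
            if keep:
                results.append(item)
        return results


calls = []


def square(x):
    calls.append(x)  # record each call to show when work actually happens
    return x * x


plan = LazyDataset(range(10)).map(square).filter(lambda x: x % 2 == 0)
print(len(calls))      # 0: building the plan ran nothing
print(plan.collect())  # [0, 4, 16, 36, 64]
print(len(calls))      # 10
```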

Supports multiple languages: Apache Spark has built-in APIs in Java, Python, R, SQL and Scala, so users can write applications in the language of their choice. Spark offers over 80 high-level operators that make it easy to build parallel applications, and you can use it interactively from the Scala, Python, R, and SQL shells.

Advanced Analytics: Spark powers a stack of libraries including SQL and DataFrames, MLlib for machine learning, GraphX, and Spark Streaming. Users can combine these libraries seamlessly in the same application to apply advanced analytics to their datasets.

Runtime platform independence: Apache Spark can run in its standalone cluster mode, on EC2, on Hadoop YARN, on Mesos, or on Kubernetes, and it can access data in HDFS, Alluxio, Apache Cassandra, Apache HBase, Apache Hive, and hundreds of other data sources, which makes it platform independent.

Yes, you heard us right!

Apache Spark supports all these capabilities, which is why it is a core part of Databricks. You can explore more about Spark using the links below:

Introduction to Apache Spark

Introduction to Apache Spark 3.0

Apache Spark- Video Content

You can read more about Delta Lake here:

Delta Lakes-Databricks

Delta Lakes- Microsoft

Delta Lake- Video Content

We know you are intrigued, right? Then why wait?

Explore how Databricks can help individuals and organizations adopt a Unified Data Analytics approach to improve performance and stay ahead of the competition.

Sign up for Databricks Community Edition and dive into its wide range of computing capabilities.

Databricks Sign Up

Alternatively, you can read more about Databricks here:

Databricks Website

Databricks Concepts

Video Content on Databricks